Spectral Graphic Notation

This project takes audio input files, converts them to a CSV of FFT data, and reads that file into a Processing sketch to generate graphic musical notation. Audio analysis and data formatting are done in Python; visualization is done in Processing. The goal is to provide a new means of generating graphic musical notation, since I have not found any existing tool satisfactory for doing so. This has applications for composers, educators, and artists. The project is heavily inspired by Xenakis and Cornelius Cardew's "Treatise".
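As a rough illustration of the audio-to-CSV step, the sketch below frames a signal, applies a Hann window, and writes one row of magnitude bins per frame. It is not the project's actual code: the function names, frame size, hop size, and CSV column names are all assumptions, and it uses a naive DFT from the standard library so it runs without dependencies (an FFT routine such as `numpy.fft.rfft` would be the usual choice for real audio).

```python
import cmath
import csv
import io
import math


def frame_magnitudes(samples, frame_size=8, hop=4):
    """Return one list of magnitude bins per Hann-windowed frame (naive DFT)."""
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size)
              for n in range(frame_size)]
    rows = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        bins = []
        for k in range(frame_size // 2 + 1):  # non-negative frequencies only
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            bins.append(abs(acc))
        rows.append(bins)
    return rows


def write_spectrum_csv(rows, handle):
    """Write a header row, then one CSV row per frame: frame index, then bins."""
    writer = csv.writer(handle)
    writer.writerow(["frame"] + [f"bin_{k}" for k in range(len(rows[0]))])
    for i, bins in enumerate(rows):
        writer.writerow([i] + [f"{m:.6f}" for m in bins])


# Synthetic test tone (assumption): a sine with 2 cycles per 8-sample frame.
tone = [math.sin(2 * math.pi * 2 * n / 8) for n in range(32)]
spectrum = frame_magnitudes(tone)

buffer = io.StringIO()  # stands in for an open CSV file
write_spectrum_csv(spectrum, buffer)
csv_text = buffer.getvalue()
```

A layout like this, with one row per time frame and one column per frequency bin, is straightforward to load on the Processing side, for example by mapping rows to the horizontal axis and bins to the vertical axis.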

A tool for generating graphical music notation based on audio files.

Predictive Audio Machine Learning

Python project for synthesizing new audio from an initial corpus using scikit-learn. The audio is clustered before any models are trained or predictions made. The primary method is a time-windowing approach with support vector regression (SVR). The example material was generated from a corpus of my own music.
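The time-windowing idea can be sketched as follows: each training example pairs a short window of past values with the value that comes next, and generation feeds each prediction back into the window. This is only an illustration under assumptions; `make_windows`, `predict_ahead`, and the window size are hypothetical names and values, the clustering step is left out, and a trivial slope-following function stands in for a trained regressor so the example needs nothing beyond the standard library.

```python
def make_windows(series, window=4):
    """Split a 1-D series into (previous `window` values, next value) pairs."""
    X, y = [], []
    for i in range(len(series) - window):
        X.append(series[i:i + window])
        y.append(series[i + window])
    return X, y


def predict_ahead(model_fn, seed_window, steps):
    """Extend a series by feeding each prediction back into the window."""
    window = list(seed_window)
    out = []
    for _ in range(steps):
        nxt = model_fn(window)
        out.append(nxt)
        window = window[1:] + [nxt]  # slide the window forward by one value
    return out


# Toy "audio" (assumption): a short ramp instead of real samples or features.
series = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7]
X, y = make_windows(series, window=4)


def linear_guess(window):
    """Hypothetical stand-in for a trained model: continue the last slope."""
    return window[-1] + (window[-1] - window[-2])


generated = predict_ahead(linear_guess, series[-4:], steps=3)
```

With scikit-learn installed, pairs shaped like `X` and `y` could be passed to `sklearn.svm.SVR().fit(X, y)`, and `model.predict([window])[0]` could take the place of `linear_guess`.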

A method for re-synthesizing audio predictions using machine learning models.

Wander Delay

This prototype is useful for swirling delays, granular textures, and room ambience.

A prototype granular delay plugin written in JUCE.

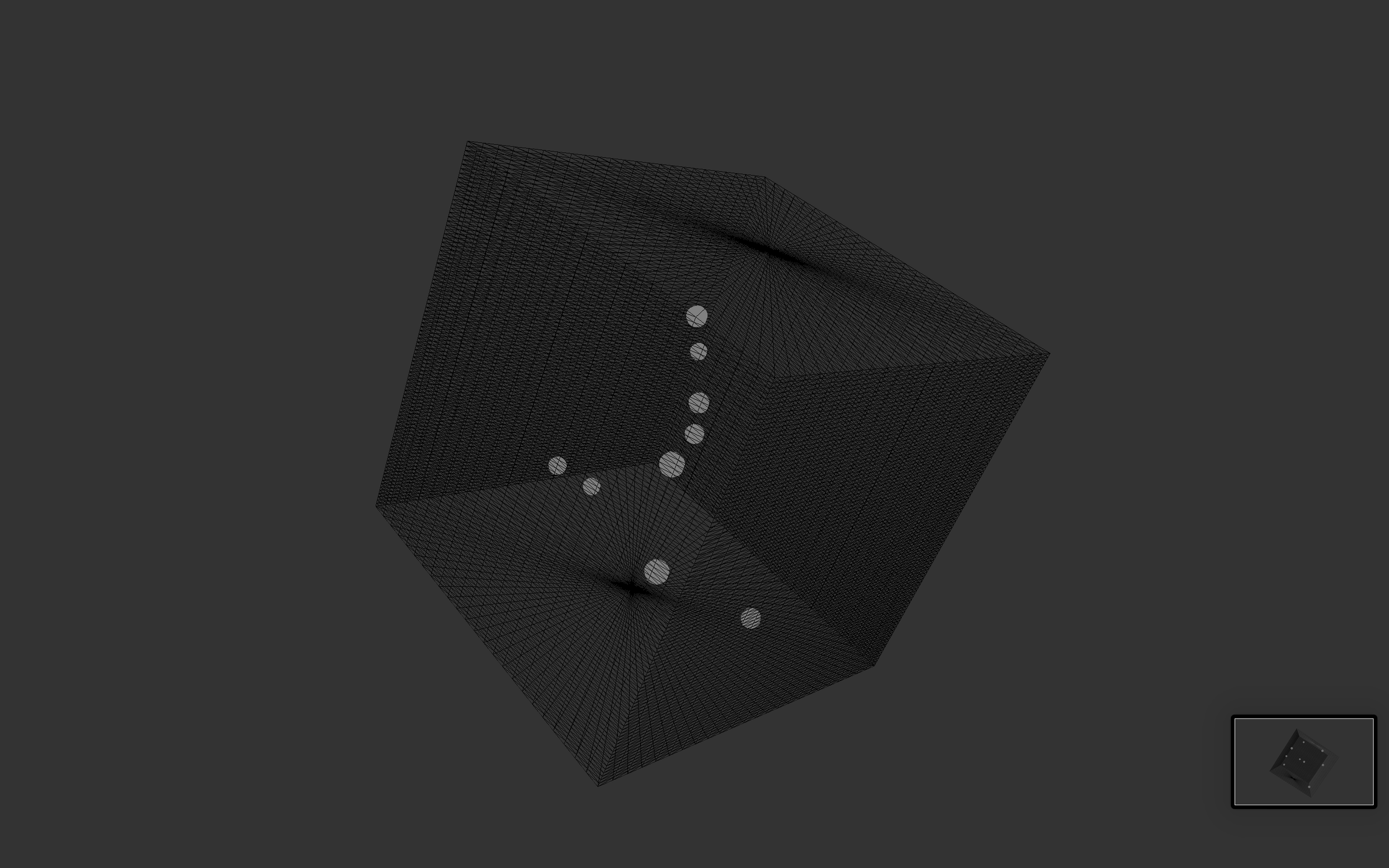

Algorithmic VBAP for Cubic Space

This project was a test in spatializing sounds based on trajectories from iterative functions. The patch creates a cubic space in which individual sound objects are panned by updating matrix coordinates. The method of spatialization is vector-based amplitude panning (VBAP). The visual space monitoring was done in Jitter. Early tests in UCSB’s AlloPortal were promising. Further development is being done to realize the project in C++.
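For readers unfamiliar with VBAP, here is a minimal sketch of the gain calculation for a single loudspeaker triplet, written in Python rather than Max. The gains are found by solving for the weights that make the weighted sum of the three speaker direction vectors point at the source, then normalizing for constant power. The speaker positions (three corners of a cube around a listener at the origin) and all function names are assumptions for illustration, not the layout used in the patch.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return [c / length for c in v]


def det3(m):
    """Determinant of a 3x3 matrix given as a list of rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def vbap_gains(source, speakers):
    """Gains g so that g1*l1 + g2*l2 + g3*l3 points at the source, |g| = 1."""
    p = normalize(source)
    l = [normalize(s) for s in speakers]
    # Columns of the system matrix are the speaker unit vectors.
    a = [[l[j][i] for j in range(3)] for i in range(3)]
    d = det3(a)
    gains = []
    for j in range(3):  # Cramer's rule: swap column j for the source vector
        m = [[p[i] if c == j else a[i][c] for c in range(3)] for i in range(3)]
        gains.append(det3(m) / d)
    return normalize(gains)  # constant-power normalization


# Hypothetical triplet: three corners of a cube, listener at the origin.
triplet = [(1, 1, 1), (1, -1, 1), (1, 1, -1)]
on_speaker = vbap_gains((1, 1, 1), triplet)  # source exactly at speaker 0
between = vbap_gains((1, 0, 1), triplet)     # halfway between speakers 0 and 1
```

A negative gain means the source lies outside the triplet; a full implementation would then pick a different triplet. Moving a sound along a trajectory amounts to recomputing the gains each time the source coordinates update.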

Max MSP Spatial Audio Prototype

Top Row: Placement of sound objects in monitor cube

Bottom Row: Source system of spatialized particles

SWELL -

Sonifying Waves Electronically (with) Live Location (data)

Ambient Surfline Radio

SuperCollider prototype. The software takes surf data from the PySurfline API (which accesses live Surfline data in Python). The data is read into SuperCollider and sonified generatively with seed values. The “Radio” prototype runs indefinitely with infinite variation. Below are results from various tests.
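The seeded, generative mapping can be illustrated in Python, although the prototype itself runs in SuperCollider. In this sketch every detail is an assumption: the wave heights are made-up numbers rather than Surfline data, no PySurfline calls are shown, and the pitch range, detune amount, and duration choices are arbitrary. The point is only that a fixed seed makes the random choices repeatable, while a new seed gives a new variation on the same data.

```python
import random


def sonify(readings, seed, low_hz=110.0, high_hz=880.0):
    """Map each reading to a pitch, with seeded random detune and duration."""
    rng = random.Random(seed)  # same seed and data give the same events
    lo, hi = min(readings), max(readings)
    span = (hi - lo) or 1.0  # avoid dividing by zero on flat data
    events = []
    for height in readings:
        position = (height - lo) / span  # 0..1 within the observed range
        # Exponential mapping: equal height steps give equal pitch intervals.
        freq = low_hz * (high_hz / low_hz) ** position
        detune = rng.uniform(-0.01, 0.01)  # up to +/- 1 percent
        events.append({"freq": freq * (1 + detune),
                       "dur": rng.choice([0.5, 1.0, 2.0])})
    return events


# Hypothetical wave heights in metres; not real Surfline data.
wave_heights = [0.6, 0.9, 1.4, 1.1, 0.8]
events_a = sonify(wave_heights, seed=7)
events_b = sonify(wave_heights, seed=7)  # identical to events_a
```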

La Sonification de la Planète Sauvage (2022)

A visual sonification experiment in Max MSP.

view max patch

This project was an experiment in sonifying visual information via Max MSP. Data was interpreted through parameters to feed synthesizers and granular audio processing. Source images are from the film Fantastic Planet (1973). This project is only for academic purposes.

Python Audio Manipulation Test - No External Libraries

This project explored audio manipulation in Python using only the standard library.

Audio Test Examples:

The code works as follows: The user provides an audio clip. The program then outputs a random number of clips, each based on a different segment of the original. Each segment has a random length and position within the original clip, and is then sped up or slowed down by a random amount. This tool is useful for sound design and resampling, independent of a DAW.
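The steps above can be sketched with the standard library alone. This is an illustrative reconstruction, not the project's code: the function names, the limits on clip count, segment length, and speed, and the synthetic sine input are all assumptions. Speed is changed by stepping through sample indices, so pitch shifts along with duration, as with tape varispeed. Reading and writing actual files could be handled with the standard `wave` module.

```python
import math
import random


def change_speed(samples, factor):
    """Resample by index stepping: factor > 1 is faster, factor < 1 slower."""
    length = int(len(samples) / factor)
    last = len(samples) - 1
    return [samples[min(int(i * factor), last)] for i in range(length)]


def random_clips(samples, rng, max_clips=5, min_len=100, speed_range=(0.5, 2.0)):
    """Cut a random number of random segments and vary each one's speed."""
    clips = []
    for _ in range(rng.randint(1, max_clips)):
        length = rng.randint(min(min_len, len(samples)), len(samples))
        start = rng.randint(0, len(samples) - length)
        segment = samples[start:start + length]
        clips.append(change_speed(segment, rng.uniform(*speed_range)))
    return clips


# Stand-in for decoded audio (assumption): one second of 220 Hz sine at 8 kHz.
source = [math.sin(2 * math.pi * 220 * n / 8000) for n in range(8000)]
clips = random_clips(source, random.Random(3))
```

Passing a seeded `random.Random` makes a given batch of clips reproducible; using `random.Random()` with no seed gives a different result on every run.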